As you might know, I’m of one the people who promote the idea of having a Constantly Usable Testing (CUT). I will post a set of articles on this topic to help me clarify my ideas and to get some early feedback of other Debian contributors. I plan to use the result of this process to kickstart a broader discussion on debian-devel (where I hope to get the release team involved).

Let’s start by defining my own objectives with Debian CUT. I won’t speak of the part of Debian CUT that plans to make regular snapshots of testing with installable media because that’s not where I will invest my time. I do hope other people will do it though. Instead I will concentrate on changes that would improve Debian testing for end-users. I consider that testing (in its current form) is largely usable already but there are two main obstacles to overcome.

Testing without freezes = rolling

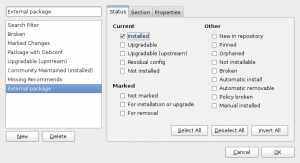

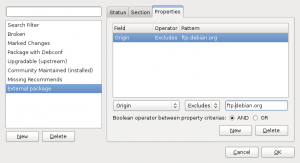

The first one is that testing is no longer testing during freezes. The regular flow of new upstream versions—that makes testing so interesting for many users—is stalled because we’re using testing to finalize the next stable version. That’s why I’d like to introduce a new suite called “rolling” that would work like the current testing except that it never freezes. Testing would no longer be a permanent suite, it would only exist during the freeze and it would be branched off from rolling.

Rolling should be a supported distribution

The second obstacle is not really technical. We must advertise rolling as a distribution that ordinary people can use but we should make it clear that it’s never going to have the same level of polish that a stable release can have. And to back up this assertion, we must empower Debian developers to be able to provide good support of their software in rolling, this probably means using “rolling-proposed-updates” more often to push fixes and security updates when the natural flow of updates is blocked by transitions or other problems. Some maintainers won’t have the time required to provide the same level of support as they currently do for a stable release and that’s okay, it’s not worse than the current testing. The goal is to treat it on a best-effort basis and to try to gradually improve the situation.

My goal for wheezy

This is the the minimal implementation of CUT that I would like to target in the wheezy timeframe. Having testing as a temporary branch of rolling is not a strict requirement, although I’d argue that it’s important to not waste resources towards maintaining two separate yet very similar distributions.

Should Debian embrace those goals?

Ignoring all possible problems that will surface while trying to implement those goals, can we agree that Debian should embrace those goals because it means providing a better service to a class of users that is not satisfied by the current stable release?

I will come back to the expected problems in a subsequent post and we will have the opportunity to discuss them. Here I just want to see whether we can have broad buy-in on the principles behind Debian rolling and CUT.

Adam D. Barratt is a Debian developer since 2008, in just a few years he got heavily involved to the point of being now “Release manager”, a high responsibility role within the community. He worked hard with the other members of the release team to make Squeeze happen.

Adam D. Barratt is a Debian developer since 2008, in just a few years he got heavily involved to the point of being now “Release manager”, a high responsibility role within the community. He worked hard with the other members of the release team to make Squeeze happen. Last week, we learned

Last week, we learned